Hadoop Eco security case

YARN is a unified resource management platform in the Hadoop system. It provides a framework for job scheduling and cluster resource management within Hadoop.

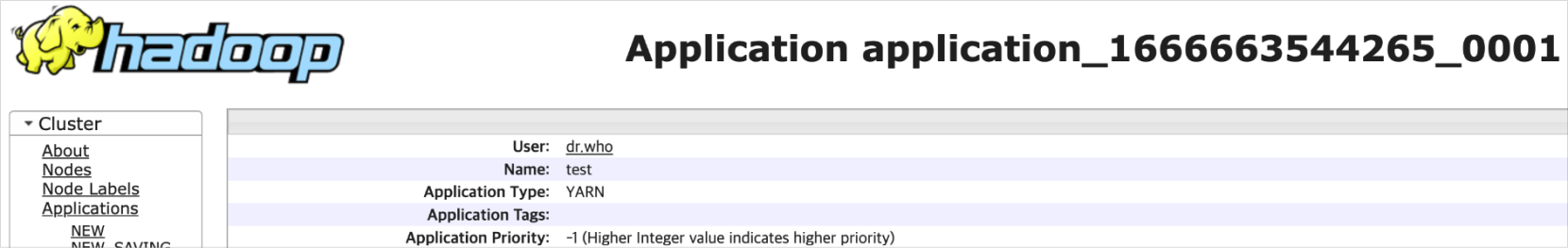

Jobs submitted through the YARN REST API go through the default port, 8088. For these requests the default user is dr.who, and the jobs run as that user.

ACLs are not checked during this process, so an external attacker who can reach port 8088 can run commands on the server or download malicious scripts.

Default job executed as user: dr.who

All Hadoop Eco clusters operating in a public cloud environment may be subject to malicious external attacks.

Therefore, it is recommended to either block REST API calls entirely or apply appropriate security measures when using REST APIs.
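One way to see whether a cluster is exposed as described above is to ask the ResourceManager for its cluster information without any credentials. The sketch below is a minimal, read-only check, assuming the standard YARN cluster information endpoint `/ws/v1/cluster/info`. The function names are illustrative and not part of Hadoop Eco. It submits nothing and only reports whether the API answers.

```python
import http.client
import json
import urllib.error
import urllib.request


def cluster_info_url(base_url: str) -> str:
    """Build the URL of the ResourceManager cluster information endpoint."""
    return base_url.rstrip("/") + "/ws/v1/cluster/info"


def is_cluster_info(payload: bytes) -> bool:
    """Return True if the payload looks like a YARN cluster info response."""
    try:
        body = json.loads(payload.decode("utf-8"))
    except ValueError:
        # Not UTF-8 or not JSON (for example, an HTML login page).
        return False
    return isinstance(body, dict) and "clusterInfo" in body


def rm_api_exposed(base_url: str, timeout: float = 3.0) -> bool:
    """Return True if the REST API answers an unauthenticated GET request."""
    try:
        with urllib.request.urlopen(cluster_info_url(base_url), timeout=timeout) as resp:
            return resp.status == 200 and is_cluster_info(resp.read())
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Refused, timed out, filtered, or rejected: nothing answered openly.
        return False
```

For example, calling `rm_api_exposed("http://203.0.113.10:8088")` from a machine outside the VPC should return `False` for a protected cluster. The address is a placeholder.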

Restrict REST API calls

To defend against malicious external attacks, you can restrict job execution through the REST API.

Hadoop Eco supports restricting job submission via the REST API from version 2.10.1.

By default, this setting is enabled (true), allowing REST API calls.

To disable REST API calls, edit the /etc/hadoop/conf/yarn-site.xml file and set yarn.webapp.enable-rest-app-submissions to false, then restart the ResourceManager.

Restrict REST API job submission

    <property>
      <name>yarn.webapp.enable-rest-app-submissions</name>
      <value>false</value>
    </property>

| Parameter | Description |
| --- | --- |
| yarn.webapp.enable-rest-app-submissions | Whether to allow REST API submissions. true (default): allow calls. false: block calls. |
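After editing the file, it can help to confirm what value the configuration actually carries. The following is a minimal sketch that parses the contents of yarn-site.xml and reports whether REST API submissions are still allowed. The function name is hypothetical. A missing property is treated as enabled, matching the default stated above. Letting the last definition win when the property appears more than once is an assumption about how the file is read.

```python
import xml.etree.ElementTree as ET

PROPERTY_NAME = "yarn.webapp.enable-rest-app-submissions"


def rest_submissions_enabled(xml_text: str) -> bool:
    """Return True if the given yarn-site.xml text allows REST API submissions."""
    root = ET.fromstring(xml_text)
    value = None
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        if name == PROPERTY_NAME:
            # Assumption: if the property is repeated, the last one applies.
            value = (prop.findtext("value") or "").strip()
    if value is None:
        # Property absent: the documented default is true (allowed).
        return True
    return value.lower() != "false"
```

A typical call reads the file named in this section, for example `rest_submissions_enabled(open("/etc/hadoop/conf/yarn-site.xml").read())`, and should return `False` once the setting is in place.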

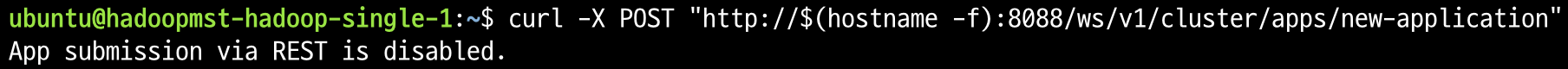

When job submission is blocked via this option, the following message is displayed during REST API submission:

Job submission blocked via REST API

Security measures when using REST API

If you must run jobs through the REST API, take the following precautions to reduce exposure.

Option 1. Keep port 8088 private

The first method is to avoid exposing port 8088 to the public.

Hadoop Eco does not assign public IPs by default, so unless a VM has a public IP, it cannot be accessed externally.

Ensure that no public IP is assigned to the VM.

-

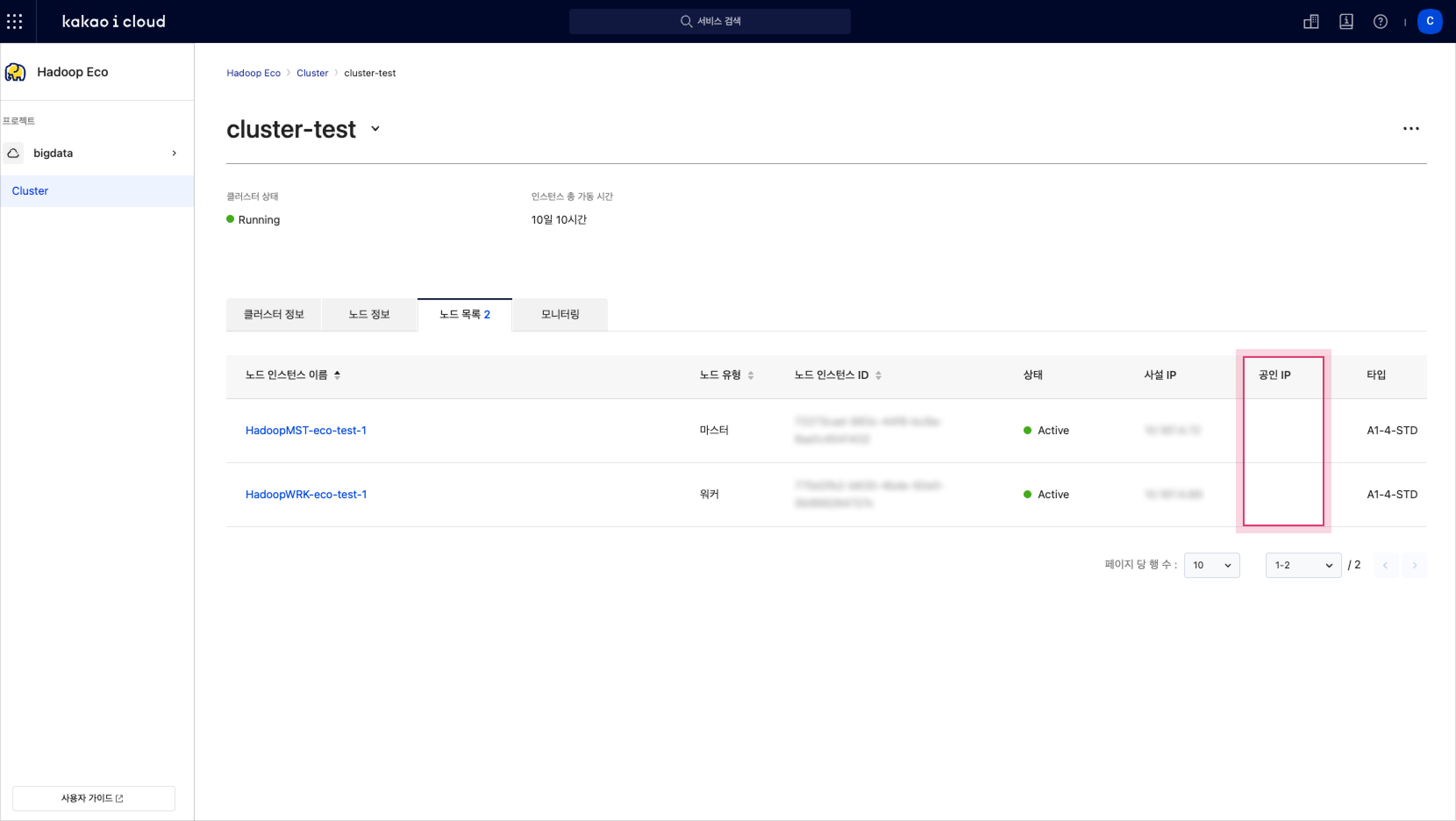

Go to the KakaoCloud console > Analytics > Hadoop Eco.

-

In the Cluster list, select the cluster to manage.

-

Go to the Node list tab and review each node.

-

Check that the public IP column is empty.

Check public IP in node list
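The console check can be complemented with a connection test from a machine outside the cluster network. The sketch below is a plain TCP probe, assuming nothing about Hadoop Eco itself. It only reports whether a connection to the given host and port can be opened within a timeout. The function name is illustrative.

```python
import socket


def port_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False
```

Run from outside the VPC against a node address and port 8088, the expected result for a node without a public IP is `False`.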

Option 2. Modify inbound rules

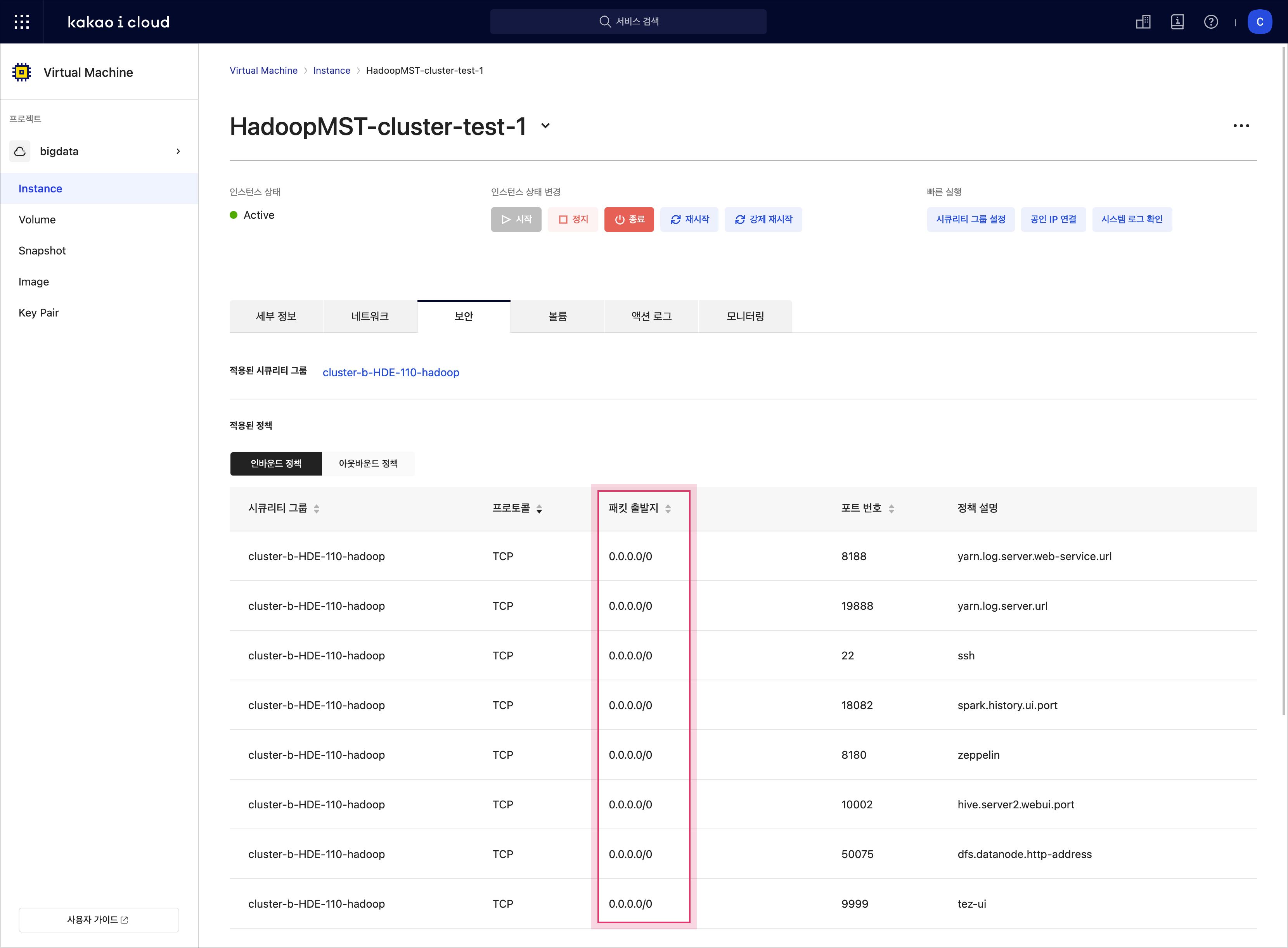

The second method is to change inbound firewall rules.

Instead of leaving the source at the default open setting of 0.0.0.0/0, configure the inbound rule to allow access only from specific source IPs.

This can be done by modifying the security group rules in your VPC.

- Go to the KakaoCloud console > Analytics > Hadoop Eco.
- In the Cluster list, select the target cluster.
- Go to the Node list tab.
- Select the instance name of the node.
- In the instance details page, go to the Security tab.
- Under the Inbound section, check the source IP.
- Select the applied security group to go to the VPC > Security tab.
- Under Inbound Rules, select Manage Inbound Rules to make changes.

Check packet source in Inbound tab
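When reviewing inbound rules, the two questions are whether a source range is open to everyone and whether a given client address falls inside an allowed range. The sketch below illustrates both with Python's standard ipaddress module. The function names and sample addresses are hypothetical, and it does not read rules from KakaoCloud. The CIDR strings are assumed to be copied from the console by hand.

```python
import ipaddress
from typing import Iterable


def is_open_to_world(cidr: str) -> bool:
    """Return True if the CIDR covers every address, such as 0.0.0.0/0."""
    return ipaddress.ip_network(cidr, strict=False).prefixlen == 0


def source_allowed(source_ip: str, allowed_cidrs: Iterable[str]) -> bool:
    """Return True if source_ip falls inside any of the allowed CIDR ranges."""
    addr = ipaddress.ip_address(source_ip)
    return any(
        addr in ipaddress.ip_network(cidr, strict=False)
        for cidr in allowed_cidrs
    )
```

For instance, `is_open_to_world("0.0.0.0/0")` is true, while a rule limited to `203.0.113.0/24` admits `203.0.113.5` and rejects `198.51.100.7`.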

Option 3. Change ResourceManager port

If the ResourceManager must be publicly accessible, the third method is to change its port from the default.

Note that changing the port may cause issues with cluster scaling operations.

To change the port, set yarn.resourcemanager.webapp.address (in host:port form) in yarn-site.xml as follows and restart the ResourceManager.

    <property>
      <name>yarn.resourcemanager.webapp.address</name>
      <value>127.0.0.1:8088</value>
    </property>
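Because the property value combines a host and a port, it is easy to change one and leave the other at its default. The sketch below splits a host:port value and lists what it implies. The function names are illustrative, and the port 18088 in the usage note is a made-up example rather than a recommended value. Applied to the sample value above, it reports that the port is still 8088 and that the address is a loopback address.

```python
import ipaddress
from typing import List, Tuple

DEFAULT_RM_WEBAPP_PORT = 8088  # default port named in this document


def parse_webapp_address(value: str) -> Tuple[str, int]:
    """Split a host:port value such as "127.0.0.1:8088" into its parts."""
    host, sep, port = value.strip().rpartition(":")
    if not sep or not host or not port.isdigit():
        raise ValueError(f"expected host:port, got {value!r}")
    return host, int(port)


def review_webapp_address(value: str) -> List[str]:
    """Return human-readable notes about a configured address."""
    host, port = parse_webapp_address(value)
    notes = []
    if port == DEFAULT_RM_WEBAPP_PORT:
        notes.append("port is still the default 8088")
    try:
        if ipaddress.ip_address(host).is_loopback:
            notes.append("bound to loopback; reachable only from the node itself")
    except ValueError:
        pass  # host is a name rather than an IP literal; nothing to check here
    return notes
```

With a hypothetical value such as `0.0.0.0:18088`, `review_webapp_address` returns an empty list, meaning neither note applies.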