GPU Instance

GPU (Graphics Processing Unit) instances provide an environment with access to thousands of GPU compute cores. They suit workloads such as training models on large datasets and running high-performance graphics applications. In fields like image recognition, speech recognition, natural language processing, and game development, the parallel throughput of GPUs shortens processing time and can reduce cost. GPU instances can also be used for large-scale distributed processing.

- Applicable types: p2a, p2i, gn1i, p1i

p2a

p2a instances are powered by 3rd generation AMD EPYC 7003 series processors and equipped with NVIDIA A100 Tensor Core GPUs, making them suitable for high-performance computing (HPC) workloads.

In Bare Metal Server instances (e.g. p2a.baremetal), applications can directly access the physical resources of the host server, such as processors, memory, and network.

Currently, only Bare Metal Server instances are available.

Hardware specifications

- Up to 3.65GHz 3rd generation AMD EPYC processor (AMD EPYC 7513)

- Up to 50Gbps network bandwidth

- Instance sizes supporting up to 128 vCPUs and 1,536GiB memory

- Up to 8 NVIDIA A100 Tensor Core GPUs

- Support for AMD instruction set (AVX, AVX2)

Detailed information

| Instance size | GPU | vCPU | Memory (GiB) | Network bandwidth (Gbps) |
|---|---|---|---|---|
| p2a.baremetal | 8 | 128 | 1536 | 50 |
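
After provisioning, it can be useful to confirm that the operating system sees the number of GPUs listed in the table above. The following is a minimal sketch, assuming the NVIDIA driver is installed and `nvidia-smi` is on the `PATH`. The helper names `parse_gpu_rows` and `visible_gpus` are illustrative and not part of any official tooling.

```python
import subprocess


def parse_gpu_rows(csv_text: str) -> list[dict]:
    """Parse output of:
    nvidia-smi --query-gpu=index,name,memory.total --format=csv,noheader
    """
    gpus = []
    for line in csv_text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        index, name, memory = (field.strip() for field in line.split(",", 2))
        gpus.append({"index": int(index), "name": name, "memory": memory})
    return gpus


def visible_gpus() -> list[dict]:
    """Ask the NVIDIA driver which GPUs are visible (requires nvidia-smi)."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=index,name,memory.total",
         "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_rows(result.stdout)
```

On a p2a.baremetal instance, `len(visible_gpus())` would be expected to match the GPU column of the table (8). A smaller number may point to a driver or hardware problem worth investigating.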

p2i

p2i instances are powered by 3rd generation Intel Xeon Scalable processors and are suitable for general-purpose GPU computing.

Hardware specifications

- Up to 3.2GHz 3rd generation Intel Xeon Scalable processor (Ice Lake 6338)

- Up to 50Gbps network bandwidth

- Instance sizes supporting up to 96 vCPUs and 768GiB memory

- Up to 4 NVIDIA A100 Tensor Core GPUs

- Support for Intel instruction set (AVX, AVX2, AVX-512)

- Support for Intel Turbo Boost Technology 2.0
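
To check from inside a Linux guest that the instruction sets listed above are actually exposed to the operating system, one option is to read the `flags` line of `/proc/cpuinfo`. This is a minimal sketch, assuming an x86 Linux instance. The flag `avx512f` (AVX-512 Foundation) is used here as a stand-in for "AVX-512", and the function names are illustrative.

```python
from pathlib import Path

# Linux flag names; avx512f is the AVX-512 Foundation subset.
REQUIRED_FLAGS = {"avx", "avx2", "avx512f"}


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the CPU feature flags from the first 'flags' line."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()


def missing_flags(cpuinfo_text: str, required=REQUIRED_FLAGS) -> set[str]:
    """Return the required flags that the CPU does not report."""
    return set(required) - cpu_flags(cpuinfo_text)


def check_host(path: str = "/proc/cpuinfo") -> set[str]:
    """Convenience wrapper that reads the host's cpuinfo file."""
    return missing_flags(Path(path).read_text())
```

An empty set returned by `check_host()` means all three flags are reported. For instance types that list only AVX and AVX2, pass `required={"avx", "avx2"}` to `missing_flags` instead.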

Detailed information

| Instance size | GPU | vCPU | Memory (GiB) | Network bandwidth (Gbps) |
|---|---|---|---|---|
| p2i.6xlarge | 1 | 24 | 192 | Max 12.5 |
| p2i.12xlarge | 2 | 48 | 384 | Max 25 |
| p2i.24xlarge | 4 | 96 | 768 | Max 50 |

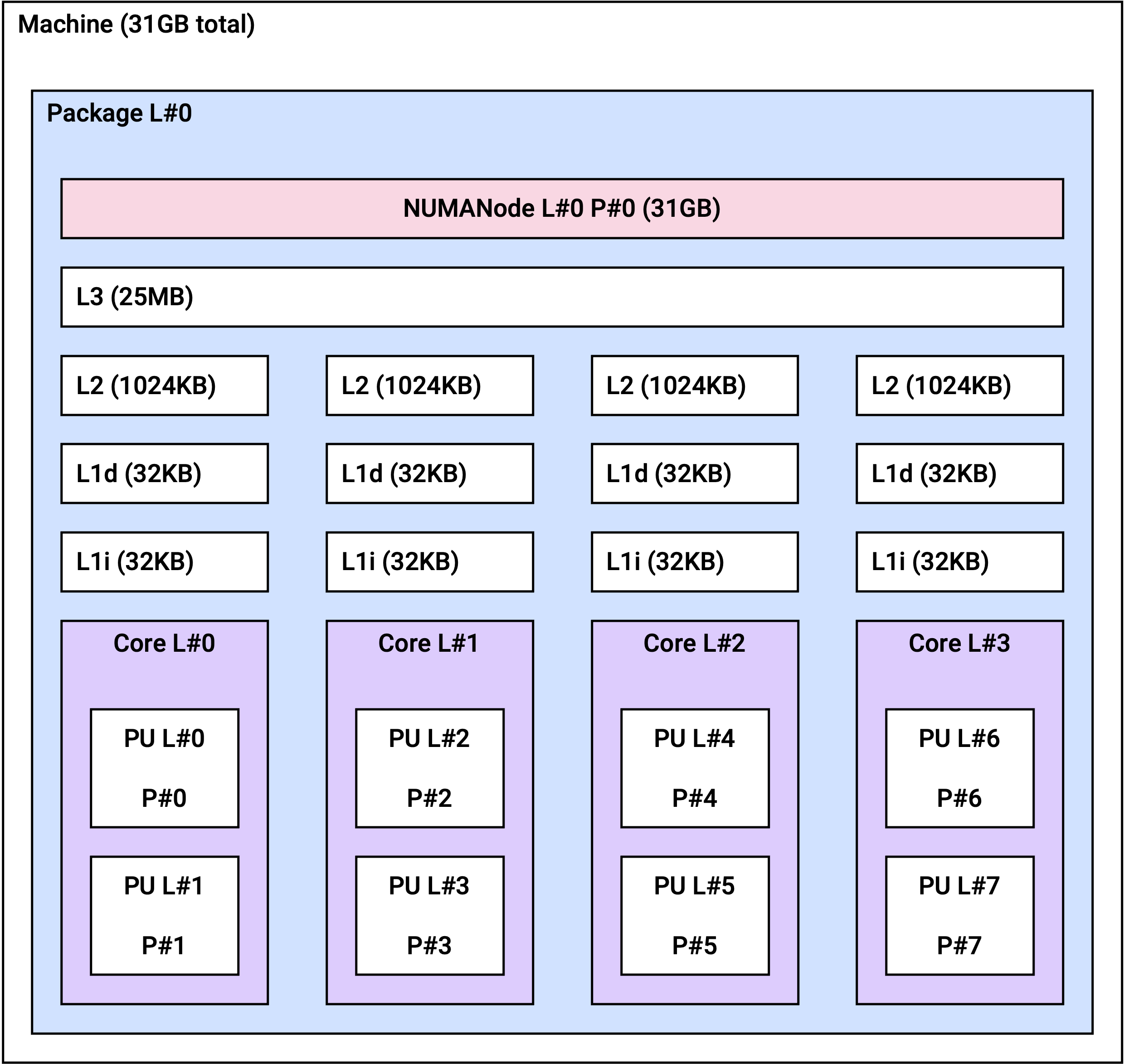

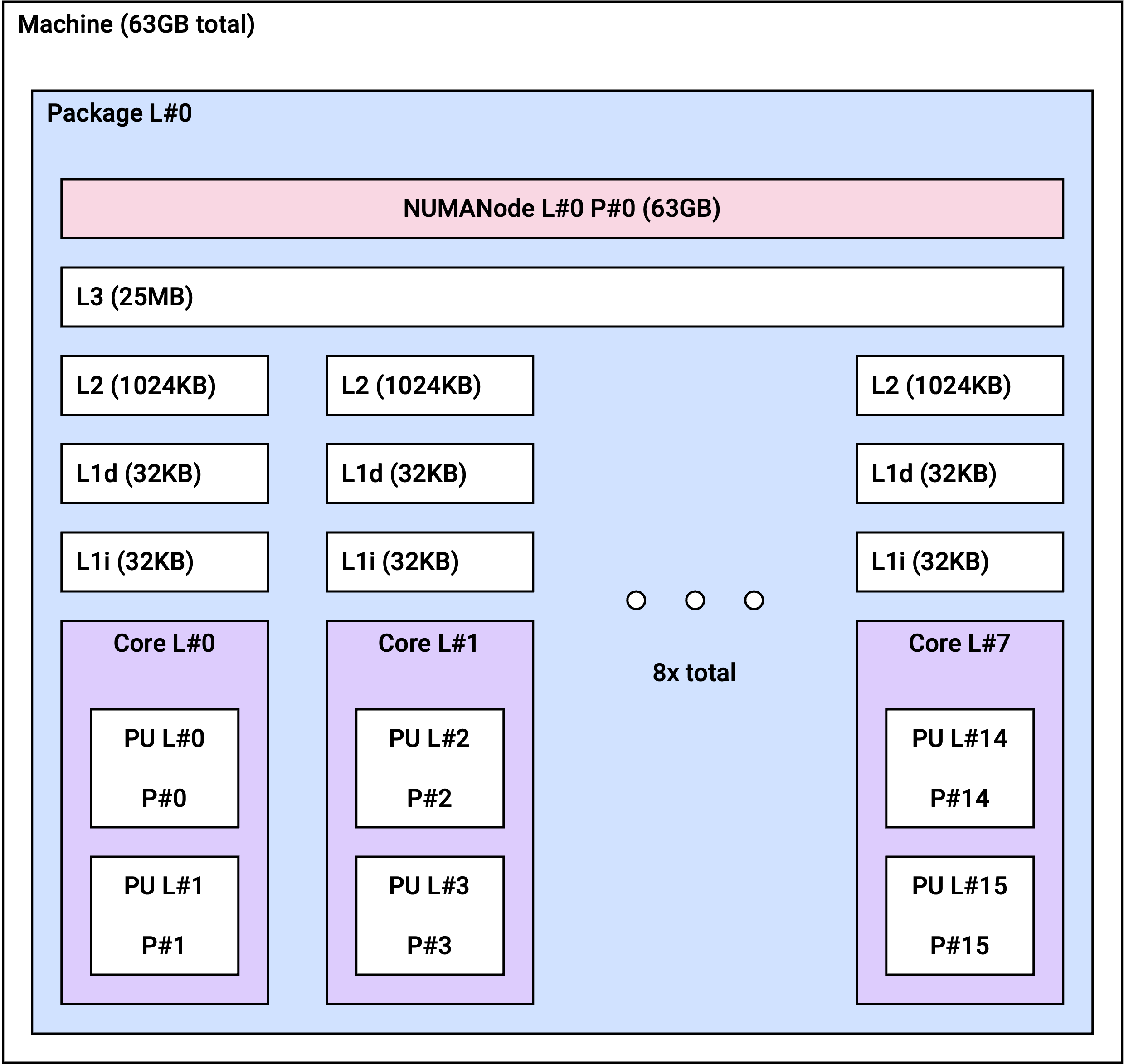

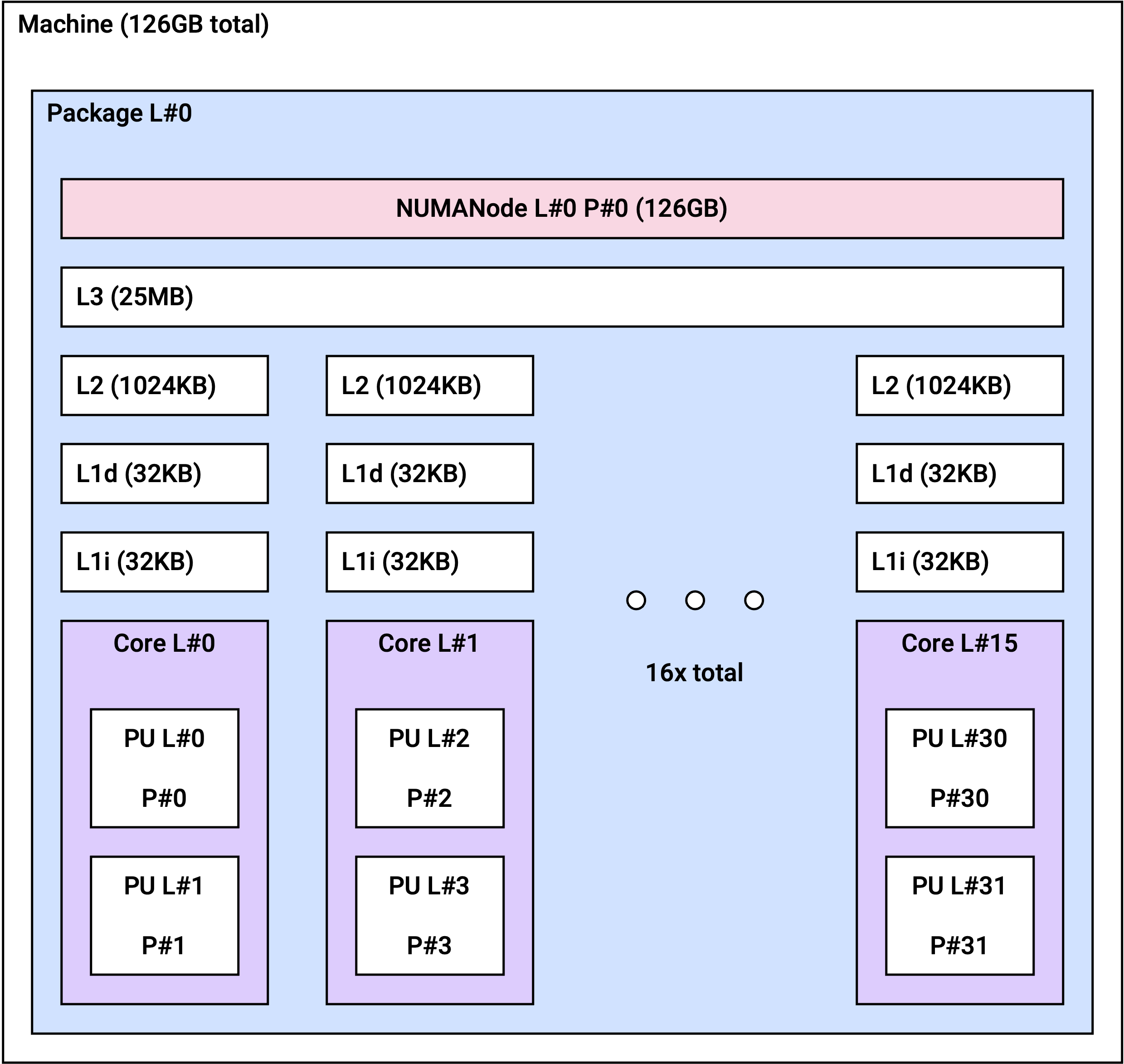

NUMA topology

In a Non-Uniform Memory Access (NUMA) architecture, each CPU has its own local memory, which it can access faster than memory attached to other CPUs (remote memory). NUMA lets multiple processors share memory while keeping the system scalable.

p2i instances have the following NUMA topology depending on the instance size.

| Instance size | Number of NUMA domains | Cores per NUMA domain |
|---|---|---|
| p2i.6xlarge | 1 | 12 |
| p2i.12xlarge | 1 | 24 |
| p2i.24xlarge | 2 | 24 |
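
The topology in the table above can be compared with what a Linux guest reports under `/sys/devices/system/node`, where each NUMA node directory contains a `cpulist` file. The following is a minimal sketch, assuming a Linux instance with sysfs mounted. The function names are illustrative.

```python
from pathlib import Path


def parse_cpulist(text: str) -> list[int]:
    """Expand a sysfs CPU list such as '0-3,8-11' into individual CPU ids."""
    cpus = []
    for part in text.strip().split(","):
        if not part:
            continue  # a node without CPUs has an empty list
        low, _, high = part.partition("-")
        cpus.extend(range(int(low), int(high or low) + 1))
    return cpus


def numa_nodes(sysfs_root: str = "/sys/devices/system/node") -> dict[int, list[int]]:
    """Map each NUMA node id to the logical CPUs that belong to it."""
    nodes = {}
    for node_dir in Path(sysfs_root).glob("node[0-9]*"):
        cpulist = node_dir / "cpulist"
        if cpulist.is_file():
            nodes[int(node_dir.name[4:])] = parse_cpulist(cpulist.read_text())
    return dict(sorted(nodes.items()))
```

Note that Linux lists logical CPUs (hardware threads), so the per-node count may be larger than the "Cores per NUMA domain" value when each core exposes more than one thread.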

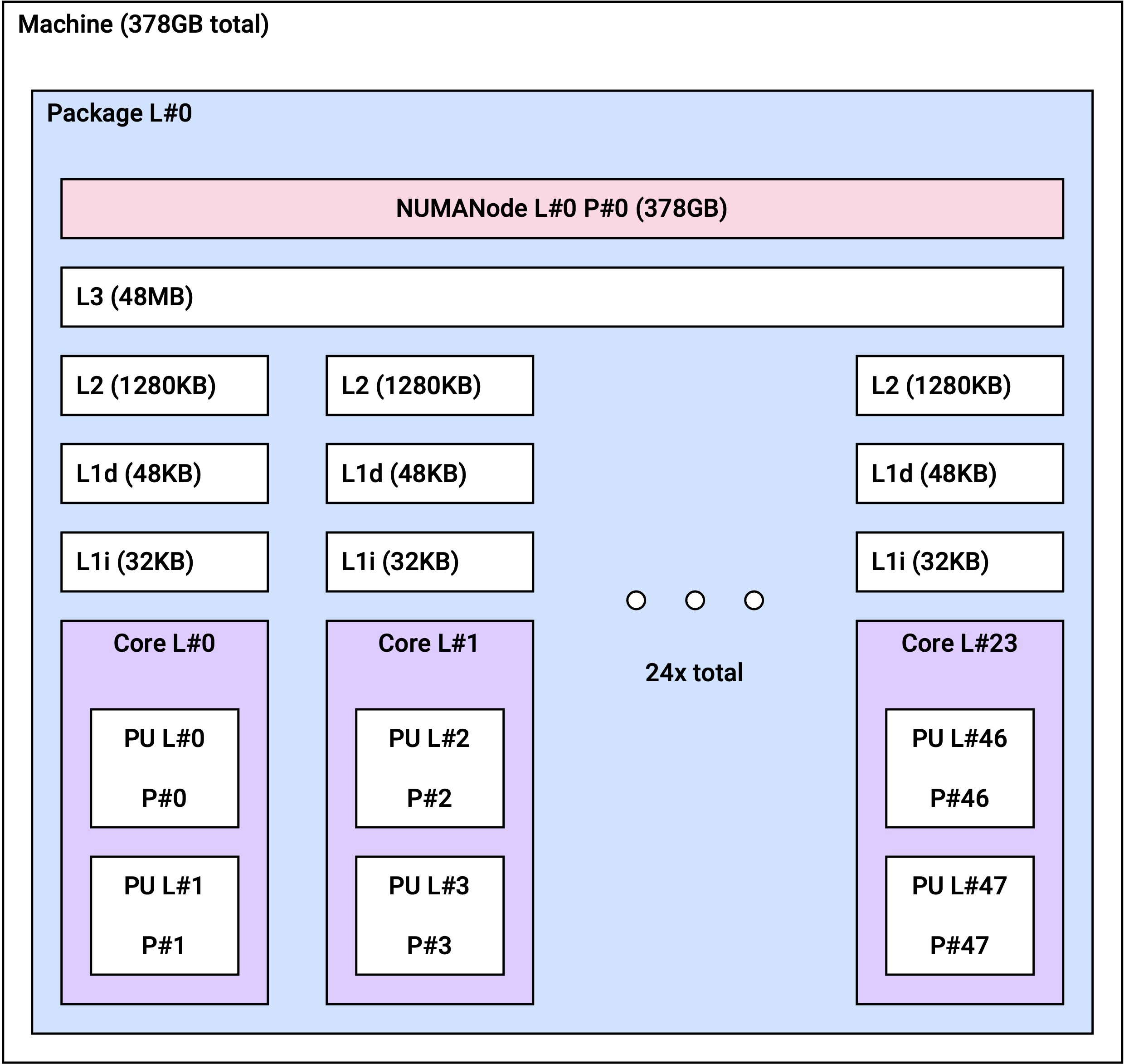

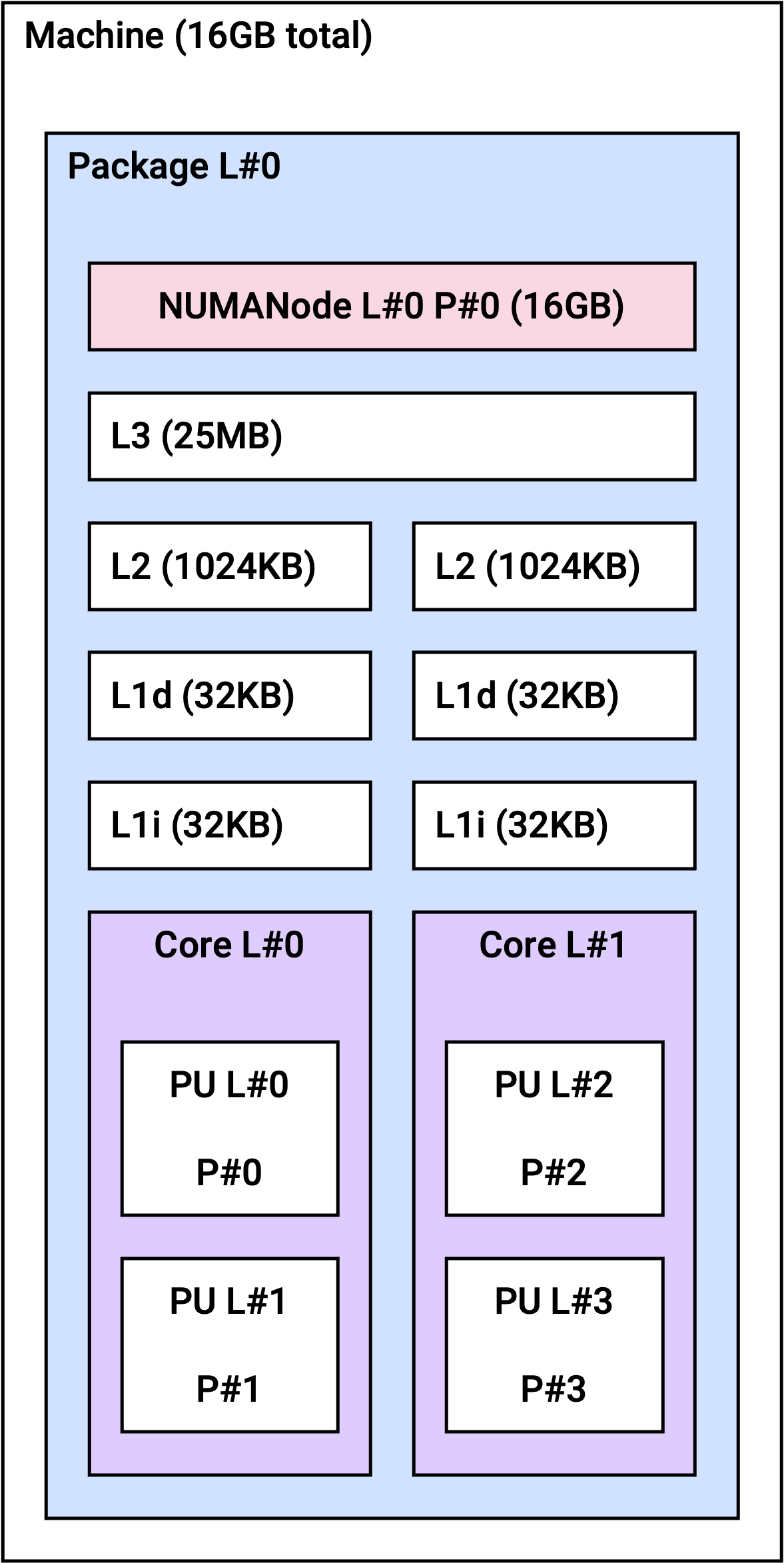

NUMA topology architecture

p2i.6xlarge

p2i.12xlarge

p2i.24xlarge

p1i

p1i instances are powered by Intel Xeon Scalable processors (Skylake, Gold 5120) and equipped with NVIDIA V100 Tensor Core GPUs, making them suitable for advanced computational workloads such as machine learning and HPC applications.

Hardware specifications

- Up to 3.2GHz Intel Xeon Scalable processor (Skylake Gold 5120)

- Up to 50Gbps network bandwidth

- Instance sizes supporting up to 56 vCPUs and 512GiB memory

- Up to 4 NVIDIA V100 Tensor Core GPUs

- Support for Intel instruction set (AVX, AVX2, AVX-512)

- Support for Intel Turbo Boost Technology 2.0

Detailed information

| Instance size | GPU | vCPU | Memory (GiB) | Network bandwidth (Gbps) |
|---|---|---|---|---|
| p1i.baremetal | 4 | 56 | 512 | Up to 50 |

gn1i

gn1i instances are powered by 2nd generation Intel Xeon Scalable processors and equipped with NVIDIA T4 Tensor Core GPUs, making them suitable for machine learning and graphically intensive workloads.

Hardware specifications

- Up to 3.9GHz 2nd generation Intel Xeon Scalable processor (Cascade Lake 5220)

- Up to 50Gbps network bandwidth

- Instance sizes supporting up to 64 vCPUs and 256GiB memory

- Up to 4 NVIDIA T4 Tensor Core GPUs

- Support for Intel instruction set (AVX, AVX2, AVX-512)

- Support for Intel Turbo Boost Technology 2.0

Detailed information

| Instance size | GPU | vCPU | Memory (GiB) | Network bandwidth (Gbps) |
|---|---|---|---|---|
| gn1i.xlarge | 1 | 4 | 16 | Max 10 |
| gn1i.2xlarge | 1 | 8 | 32 | Max 10 |
| gn1i.4xlarge | 1 | 16 | 64 | Max 10 |
| gn1i.8xlarge | 1 | 32 | 128 | Max 25 |
| gn1i.12xlarge | 4 | 48 | 192 | Max 25 |
| gn1i.16xlarge | 1 | 64 | 256 | Max 50 |
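
Because the GPU count does not grow with the instance size in this family, picking a size by hand is easy to get wrong. The sketch below encodes the table above and returns the smallest size that meets a given requirement. It is an illustration only: "smallest" is assumed to mean the fewest vCPUs (pricing is not covered here), and `Size`, `GN1I_SIZES`, and `smallest_fit` are hypothetical names.

```python
from typing import NamedTuple, Optional


class Size(NamedTuple):
    name: str
    gpus: int
    vcpus: int
    memory_gib: int


# Values copied from the gn1i table above.
GN1I_SIZES = [
    Size("gn1i.xlarge", 1, 4, 16),
    Size("gn1i.2xlarge", 1, 8, 32),
    Size("gn1i.4xlarge", 1, 16, 64),
    Size("gn1i.8xlarge", 1, 32, 128),
    Size("gn1i.12xlarge", 4, 48, 192),
    Size("gn1i.16xlarge", 1, 64, 256),
]


def smallest_fit(gpus: int, vcpus: int, memory_gib: int,
                 sizes=GN1I_SIZES) -> Optional[Size]:
    """Return the size with the fewest vCPUs that satisfies all requirements."""
    candidates = [
        s for s in sizes
        if s.gpus >= gpus and s.vcpus >= vcpus and s.memory_gib >= memory_gib
    ]
    return min(candidates, key=lambda s: (s.vcpus, s.memory_gib), default=None)
```

For example, `smallest_fit(1, 12, 48)` selects gn1i.4xlarge, while any request for more than one GPU can only be met by gn1i.12xlarge. A request that no size satisfies returns `None`.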

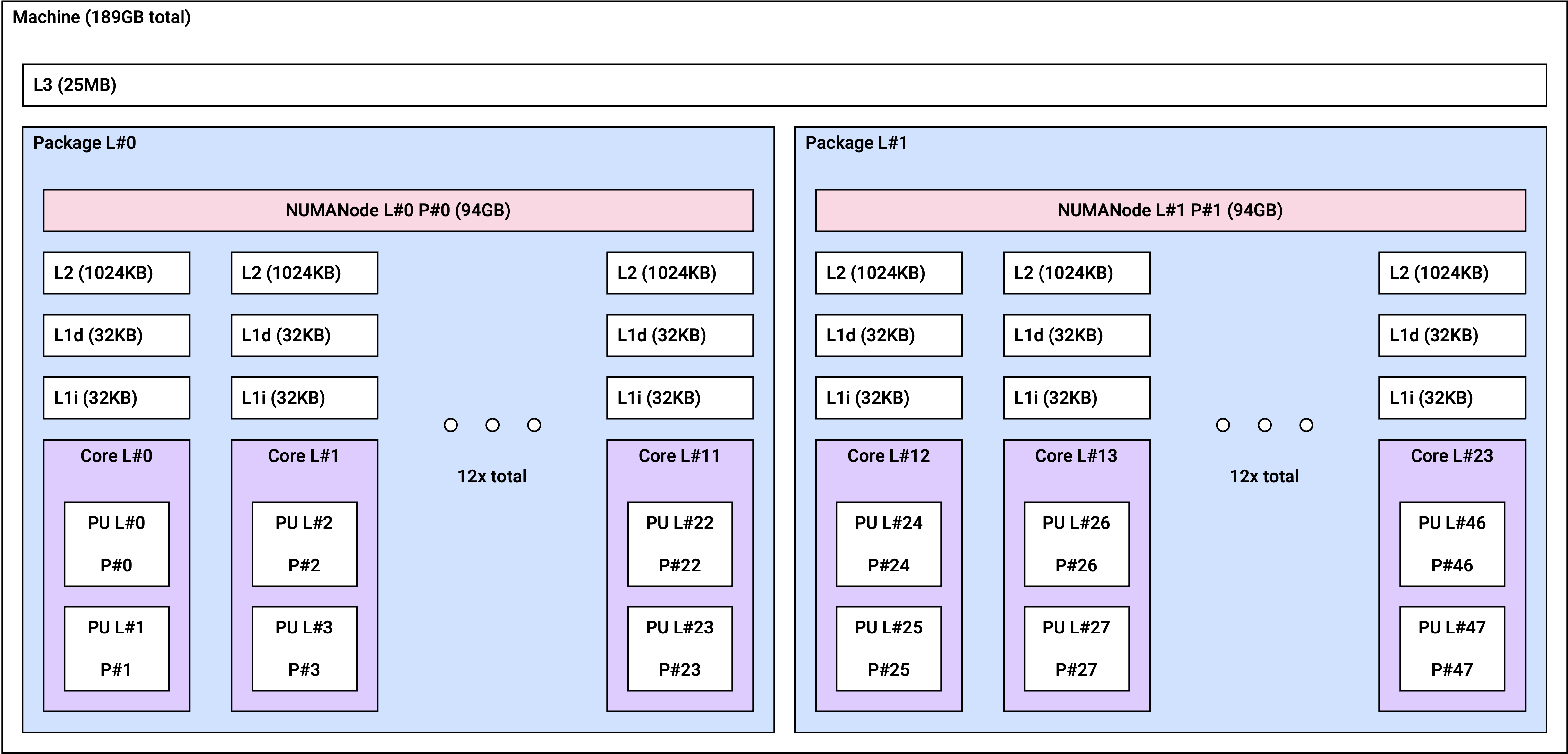

NUMA topology

gn1i instances have the following NUMA topology depending on the instance size.

| Instance size | Number of NUMA domains | Cores per NUMA domain |
|---|---|---|
| gn1i.xlarge | 1 | 2 |
| gn1i.2xlarge | 1 | 4 |
| gn1i.4xlarge | 1 | 8 |
| gn1i.8xlarge | 2 | 16 |
| gn1i.12xlarge | 2 | 12 |
| gn1i.16xlarge | 2 | 16 |
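
On sizes with two NUMA domains, a latency-sensitive process may benefit from staying on the CPUs of a single domain so that its memory accesses remain local. The following is a minimal sketch of that idea, assuming a Linux guest. It uses `os.sched_setaffinity`, which is available only on Linux, and the helper names `node_cpus` and `pin_to_node` are illustrative. It restricts CPU placement only and does not set a memory allocation policy.

```python
import os
from pathlib import Path


def node_cpus(node: int, sysfs_root: str = "/sys/devices/system/node") -> set[int]:
    """Read the logical CPUs of one NUMA node from sysfs."""
    text = Path(sysfs_root, f"node{node}", "cpulist").read_text().strip()
    cpus = set()
    for part in text.split(","):
        if not part:
            continue  # node without CPUs
        low, _, high = part.partition("-")
        cpus.update(range(int(low), int(high or low) + 1))
    return cpus


def pin_to_node(node: int, sysfs_root: str = "/sys/devices/system/node") -> set[int]:
    """Restrict the current process to the CPUs of the given NUMA node."""
    # Only keep CPUs this process is already allowed to run on.
    target = node_cpus(node, sysfs_root) & os.sched_getaffinity(0)
    if not target:
        raise RuntimeError(f"no usable CPUs on NUMA node {node}")
    os.sched_setaffinity(0, target)  # 0 means the calling process
    return target
```

Calling `pin_to_node(0)` early in a worker process keeps it on the first domain. A second worker could call `pin_to_node(1)` to use the other domain on the two-domain sizes.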

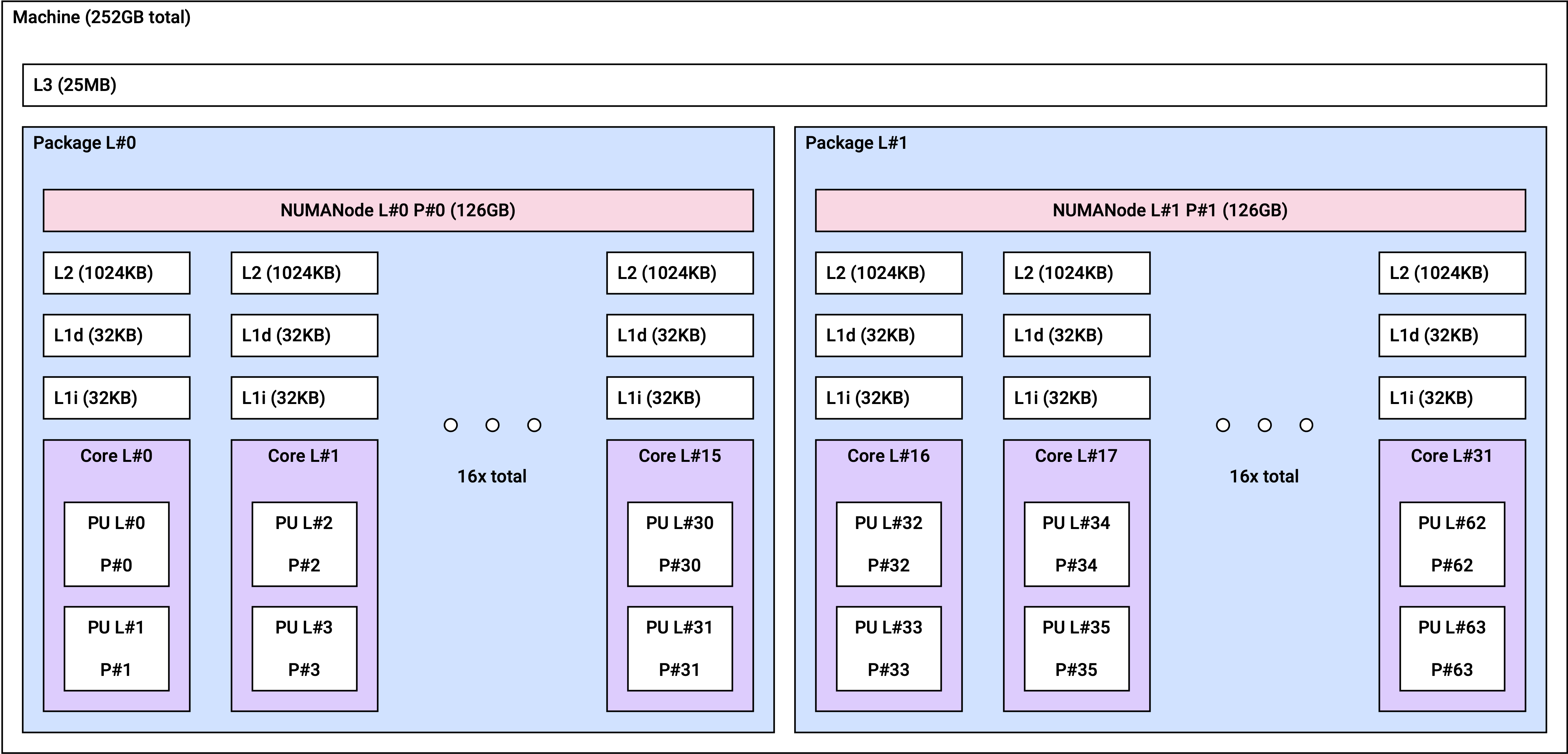

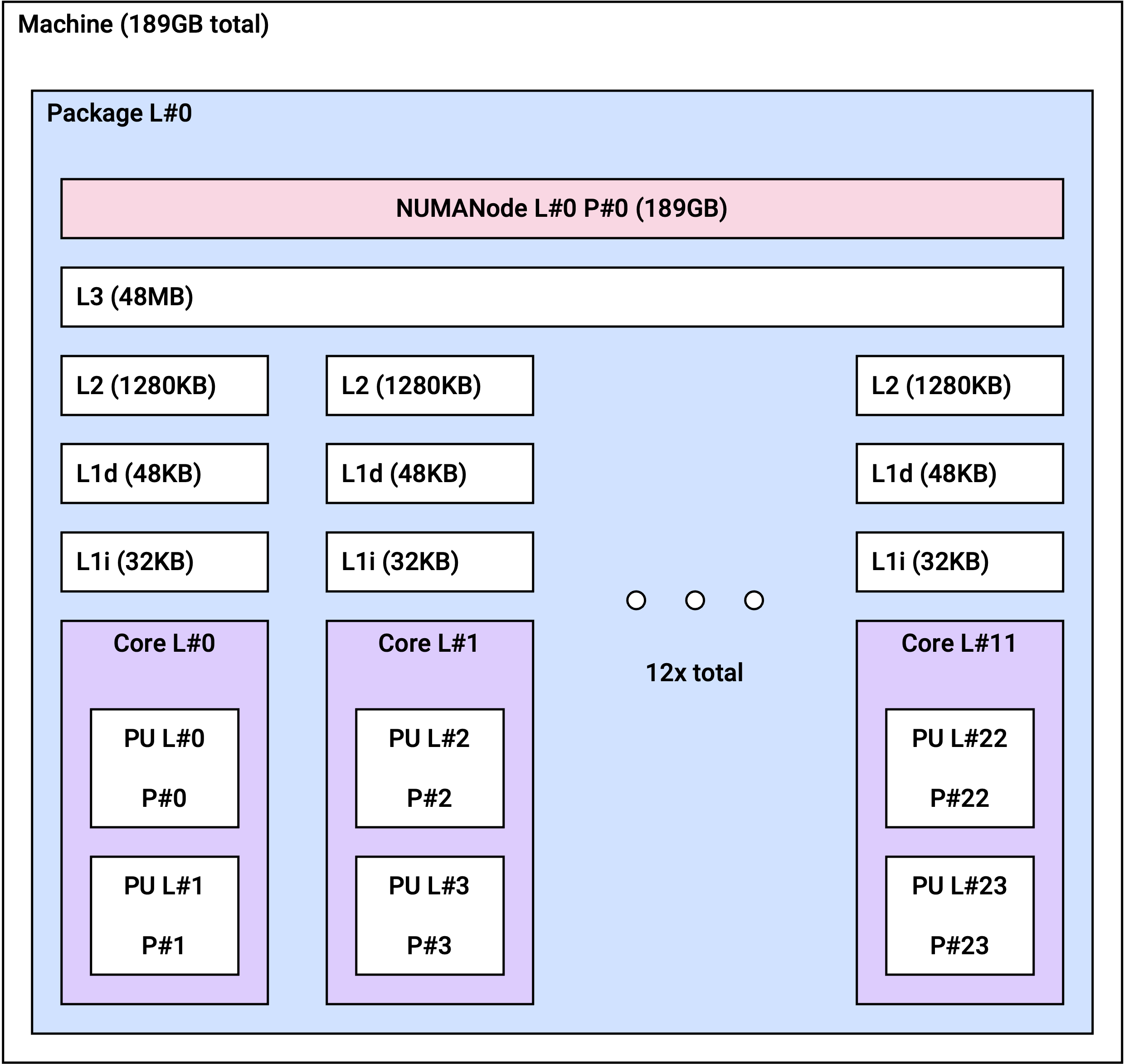

NUMA topology architecture

gn1i.xlarge

gn1i.2xlarge

gn1i.4xlarge

gn1i.8xlarge

gn1i.12xlarge

gn1i.16xlarge