Ingest Cloud Trail logs into Splunk Enterprise

This guide explains how to collect and analyze Cloud Trail logs stored in Object Storage using Splunk Enterprise.

- Estimated time: 40 minutes

- Recommended Operating System: macOS, Ubuntu

- Prerequisites:

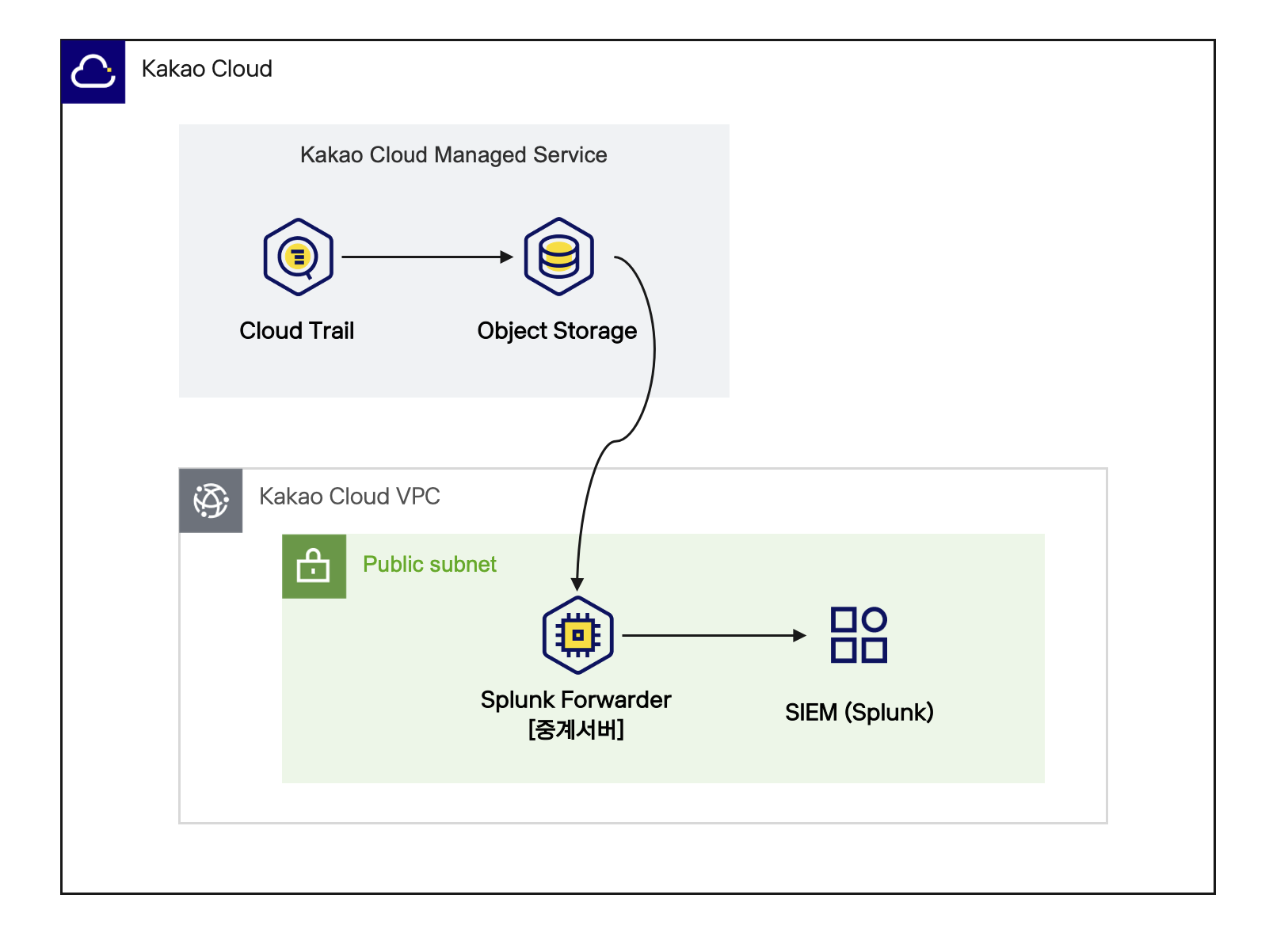

Scenario overview

This scenario provides a detailed guide on how to ingest Cloud Trail logs stored in Object Storage into Splunk Enterprise. This setup enables real-time monitoring and analysis, allowing users to visualize system health and detect anomalies quickly for proactive incident response.

Key topics covered in this tutorial:

- Setting up Splunk Enterprise

- Configuring the Splunk Universal Forwarder: Installing and configuring the Forwarder agent to forward log files to Splunk Enterprise

- Automating log storage: Writing a script that downloads Cloud Trail logs from Object Storage into a directory monitored by the Forwarder agent

- Ensuring automatic download of missing logs in case of errors

- Running the script in the background: Starting the script as a background process that runs every hour

- Verifying logs in Splunk Enterprise

- Splunk Enterprise: A data analytics platform for enterprises that collects and analyzes various log data in real-time, enabling proactive monitoring and anomaly detection.

- Splunk Universal Forwarder: A lightweight data collection agent that collects logs and data from remote servers and forwards them to the Splunk indexer. In this tutorial, it forwards the Cloud Trail logs that the script downloads from Object Storage to Splunk Enterprise for real-time analysis and search.

Architecture Diagram


Before you start

This section outlines the necessary preparatory steps for setting up the Splunk server, configuring the Forwarder agent, and setting up log processing.

1. Creating an Object Storage bucket and enabling Cloud Trail log storage

To utilize Cloud Trail logs, you first need to create an Object Storage bucket where logs will be stored. This bucket serves as the repository for Cloud Trail logs, which will later be forwarded to Splunk for analysis. Additionally, configure the Cloud Trail log storage feature to ensure logs are automatically stored in Object Storage.

2. Setting up the network environment

Configure a VPC and subnets to enable seamless communication between the Splunk Enterprise server and the Forwarder agent.

VPC and Subnet: tutorial

- Go to KakaoCloud console > Beyond Networking Service > VPC.

- Select the [+ Create VPC] button and configure the VPC and subnet as follows:

| Category | Item | Value |
|---|---|---|
| VPC Info | VPC Name | tutorial |
| | VPC IP CIDR Block | 10.0.0.0/16 |
| Availability Zone | Number of AZs | 1 |
| | First AZ | kr-central-2-a |
| Subnet Configuration | Public Subnet per AZ | 1 |
| | kr-central-2-a Public Subnet IPv4 CIDR Block | 10.0.0.0/20 |

- After confirming the topology, select the [Create] button.

- The subnet status changes from Pending Create > Pending Update > Active. Make sure the status is Active before proceeding.
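The CIDR values in the table above must nest: the subnet range has to fall entirely inside the VPC range. As an optional sanity check before you enter the values, Python's standard `ipaddress` module can confirm this. This is a local aid only and is not part of the KakaoCloud workflow.

```python
import ipaddress

# Values from the VPC/subnet table above.
vpc = ipaddress.ip_network("10.0.0.0/16")
subnet = ipaddress.ip_network("10.0.0.0/20")

# The subnet must lie entirely inside the VPC's address range.
print(subnet.subnet_of(vpc))   # True
# A /20 spans 2**(32 - 20) = 4096 addresses in total (a platform may reserve a few).
print(subnet.num_addresses)    # 4096
# First and last address of the subnet range.
print(subnet[0], subnet[-1])   # 10.0.0.0 10.0.15.255
```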

3. Configuring security groups

Configure security groups to restrict external access while allowing necessary traffic for secure communication between the Splunk server and the Forwarder agent.

Security Group: tutorial-splunk-sg

- Go to KakaoCloud console > VPC > Security Groups and create a security group with the following settings:

| Name | Description (Optional) |
|---|---|
| tutorial-splunk-sg | Security policy for the Splunk server |

- Select [+ Add Rule] and configure the inbound rules as follows:

| Inbound Rule | Protocol | Source | Port Number | Description (Optional) |
|---|---|---|---|---|
| splunk inbound policy 1 | TCP | {Your Public IP}/32 | 22 | Allow SSH Access |
| splunk inbound policy 2 | TCP | {Your Public IP}/32 | 8000 | Allow Splunk Enterprise Web Access |

Security Group: tutorial-forwarder-sg

- Go to KakaoCloud console > VPC > Security Groups and create a security group with the following settings:

| Name | Description (Optional) |
|---|---|
| tutorial-forwarder-sg | Security policy for the Forwarder server |

- Select [+ Add Rule] and configure the inbound rules as follows:

| Inbound Rule | Protocol | Source | Port Number | Description (Optional) |
|---|---|---|---|---|
| forwarder inbound policy 1 | TCP | {Your Public IP}/32 | 22 | Allow SSH Access |
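An inbound rule admits traffic only when the protocol, source, and port all match. As with typical cloud security groups, inbound traffic that no rule admits is dropped.

The sketch below models that evaluation to show two things. First, `{Your Public IP}/32` matches exactly one address. Second, port 9997 on the Splunk server stays closed until the rule for the Forwarder is added in Step 2.

Everything here is hypothetical. `InboundRule` and `is_allowed` are illustrative names, not a KakaoCloud API, and 203.0.113.10 is a documentation-only address standing in for your public IP.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class InboundRule:
    """Hypothetical model of one inbound rule row from the tables above."""
    protocol: str
    source_cidr: str
    port: int


def is_allowed(rules, protocol, source_ip, port):
    """Return True if any rule admits this protocol, source IP, and port."""
    addr = ipaddress.ip_address(source_ip)
    for rule in rules:
        if (rule.protocol == protocol
                and rule.port == port
                and addr in ipaddress.ip_network(rule.source_cidr)):
            return True
    return False  # no matching rule: the traffic is dropped


# 203.0.113.10 stands in for {Your Public IP}.
splunk_sg = [
    InboundRule("TCP", "203.0.113.10/32", 22),
    InboundRule("TCP", "203.0.113.10/32", 8000),
]

print(is_allowed(splunk_sg, "TCP", "203.0.113.10", 8000))  # True
print(is_allowed(splunk_sg, "TCP", "198.51.100.7", 8000))  # False: outside the /32
print(is_allowed(splunk_sg, "TCP", "203.0.113.10", 9997))  # False: no rule for 9997 yet
```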

This section sets up an environment for collecting and analyzing log data with Splunk Enterprise and the Forwarder. The steps cover creating a Splunk instance, configuring the Forwarder, and writing an automated log transfer script.

Step 1. Setting Up the Splunk Instance

Create an instance to install Splunk Enterprise and configure the basic environment for log collection and analysis.

- Download the free trial of Splunk Enterprise from the official Splunk website. In this example, select Linux > .tgz and then select Copy wget link.

- Create an instance for the Splunk Enterprise server using the KakaoCloud Virtual Machine service.

Splunk instance: tutorial-splunk

- Go to KakaoCloud console > Beyond Compute Service > Virtual Machine.

- Refer to the table below to create a VM instance for the Splunk Enterprise server.

| Category | Item | Value | Note |
|---|---|---|---|
| Basic Info | Name | tutorial-splunk | |
| | Quantity | 1 | |
| Image | | Ubuntu 24.04 | |
| Instance Type | | m2a.large | |
| Volume | Root Volume | 50 | |
| Key Pair | | {USER_KEYPAIR} | ⚠️ Store the key pair securely when you create it. Lost keys cannot be recovered and must be reissued. |
| Network | VPC | tutorial | |
| | Security Group | tutorial-splunk-sg | |
| | Network Interface 1 | New Interface | |
| | Subnet | main (10.0.0.0/20) | |
| | IP Allocation | Automatic | |

- Associate a public IP with the created Splunk instance.

- Connect to the Splunk instance via SSH and install Splunk Enterprise using the commands below.

```bash
# Download: run the wget command you copied from the Splunk website

# Extract the downloaded file (the file name depends on the version you downloaded)
tar xvzf splunk-9.4.0-6b4ebe426ca6-linux-amd64.tgz

# Start the Splunk server; you are prompted to set the admin username and password
sudo ./splunk/bin/splunk start --accept-license
```

Example of normal output:

```
Waiting for web server at http://127.0.0.1:8000 to be available............ done

If you get stuck, we're here to help.
Look for answers here: http://docs.splunk.com

The Splunk web interface is at http://host-172-16-0-32:8000
```

- Open a browser, go to http://{splunk_instance_public_ip}:8000, and log in with the username and password you set when starting the Splunk server.

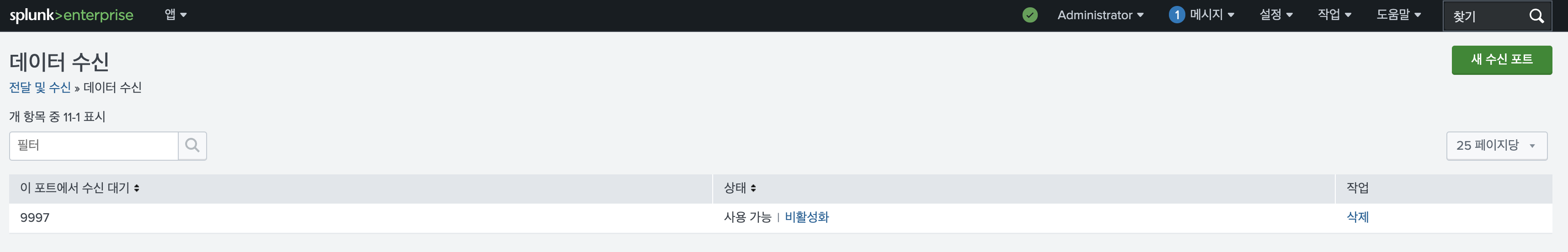

- On the Splunk Enterprise page, go to Settings > Forwarding and receiving > Data Receiving, select the [New Receiving Port] button, and create port 9997 (see the image below).

Reference Image


Step 2. Setting Up the Forwarder Instance

To forward the logs stored in Object Storage, install the Splunk Universal Forwarder agent. In this tutorial, the server running this agent is called the Forwarder instance.

- Create the Forwarder instance.

Forwarder Instance: tutorial-forwarder

- Go to KakaoCloud console > Beyond Compute Service > Virtual Machine.

- Refer to the table below to create a VM instance for the Forwarder agent.

| Category | Item | Value | Note |
|---|---|---|---|
| Basic Info | Name | tutorial-forwarder | |
| | Quantity | 1 | |
| Image | | Ubuntu 24.04 | |
| Instance Type | | m2a.large | |
| Volume | Root Volume | 50 | |
| Key Pair | | {USER_KEYPAIR} | ⚠️ Store the key pair securely when you create it. Lost keys cannot be recovered and must be reissued. |
| Network | VPC | tutorial | |
| | Security Group | tutorial-forwarder-sg | |
| | Network Interface 1 | New Interface | |
| | Subnet | main (10.0.0.0/20) | |
| | IP Allocation | Automatic | |

- Associate a public IP with the created Forwarder instance.

- Connect to the Forwarder instance via SSH and install the Universal Forwarder agent by following the official Splunk documentation.

- Configure the Forwarder to send log data to the Splunk Enterprise server using the following settings.

```bash
# Create the directory whose files are forwarded to Splunk Enterprise
# (-p also creates /home/ubuntu/cloudtrail; no sudo, so the script running as ubuntu can write here)
mkdir -p /home/ubuntu/cloudtrail/processed_data

# Configure Splunk to monitor the log files in this directory
sudo /home/ubuntu/splunkforwarder/bin/splunk add monitor /home/ubuntu/cloudtrail/processed_data/

# Forward the log data to the Splunk Enterprise server
sudo /home/ubuntu/splunkforwarder/bin/splunk add forward-server ${SPLUNK_PRIVATE_IP}:${SPLUNK_PORT}
```

| Environment variable | Description |
|---|---|
| SPLUNK_PRIVATE_IP | Private IP of the Splunk instance |
| SPLUNK_PORT | Splunk receiving port (9997) |

- When the Splunk Universal Forwarder is working properly, every log file stored in the processed_data directory is automatically sent to the Splunk server.

- Network issues may temporarily interrupt communication between the Forwarder and the Splunk server, in which case log files may not reach the server. Check /home/ubuntu/splunkforwarder/var/log/splunk/splunkd.log to find when communication was interrupted. This file records connection issues between the Forwarder and the server in detail.

- If log files were not sent because of a communication failure, copy them back into the processed_data directory. The Forwarder detects them and resends the missing logs to the server.

- Even if logs are lost to network issues, they can be recovered as long as the original data remains in the processed_data directory.
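Finding the time of an interruption in splunkd.log can be scripted. The sketch below rests on two assumptions that you should verify against your own log:

- Each splunkd.log line carries the log level and the component name as separate tokens right after the timestamp.
- Forwarding problems are reported by the TcpOutputProc component.

`forwarding_problems` is a hypothetical helper name.

```python
from pathlib import Path

# splunkd.log location for the Forwarder installed under /home/ubuntu in this tutorial
SPLUNKD_LOG = Path("/home/ubuntu/splunkforwarder/var/log/splunk/splunkd.log")


def forwarding_problems(lines, component="TcpOutputProc"):
    """Yield WARN/ERROR lines logged by `component`.

    Assumes the level and component appear as separate whitespace-delimited
    tokens within the first few fields of each line, after the timestamp.
    """
    for line in lines:
        head = set(line.split()[:6])
        if component in head and head & {"WARN", "ERROR"}:
            yield line.rstrip("\n")


if __name__ == "__main__":
    if SPLUNKD_LOG.exists():
        with SPLUNKD_LOG.open(errors="replace") as f:
            for problem in forwarding_problems(f):
                print(problem)
    else:
        print(f"{SPLUNKD_LOG} not found; run this on the Forwarder instance")
```

The timestamps at the start of the printed lines indicate when forwarding failed. Files written to processed_data around those times are the ones to copy back into the directory.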

- Verify that the settings were applied correctly. The outputs.conf file of the Splunk Universal Forwarder, printed below, contains the settings for the server that receives the data.

```bash
$ sudo cat /home/ubuntu/splunkforwarder/etc/system/local/outputs.conf
```

Example output:

```
[tcpout]
defaultGroup = default-autolb-group

[tcpout:default-autolb-group]
server = ${SPLUNK_PRIVATE_IP}:${SPLUNK_PORT}

[tcpout-server://${SPLUNK_PRIVATE_IP}:${SPLUNK_PORT}]
```
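To check the forwarding target programmatically, a short Python sketch can parse outputs.conf, which uses INI-style `[stanza]` and `key = value` lines. The sketch makes three assumptions:

- The file follows the stanza layout shown above.
- `forward_servers` is a hypothetical helper name.
- `configparser` only approximates Splunk's own .conf parsing.

Run it with sudo, because the file is read with sudo above.

```python
import configparser

OUTPUTS_CONF = "/home/ubuntu/splunkforwarder/etc/system/local/outputs.conf"


def forward_servers(conf_text):
    """Return the server list of the default tcpout group in outputs.conf text."""
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.optionxform = str  # keep key case, e.g. defaultGroup
    parser.read_string(conf_text)
    group = parser.get("tcpout", "defaultGroup")
    servers = parser.get(f"tcpout:{group}", "server")
    # The server setting may hold a comma-separated list of host:port entries.
    return [s.strip() for s in servers.split(",") if s.strip()]


if __name__ == "__main__":
    try:
        with open(OUTPUTS_CONF) as f:
            print(forward_servers(f.read()))
    except OSError as e:
        print(f"Cannot read {OUTPUTS_CONF}: {e}")
```

If the printed list does not contain the private IP of the Splunk instance and port 9997, rerun the `add forward-server` command.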

- Add the following inbound rule to the security group of the Splunk instance (tutorial-splunk-sg).

Security Group: tutorial-splunk-sg

- Go to KakaoCloud console > VPC > Security Groups and select the tutorial-splunk-sg security group (Splunk server security policy).

- Select the [+ Add] button at the bottom, configure the inbound rule as shown below, and select [Apply].

| Inbound Rule | Protocol | Source | Port | Description (Optional) |
|---|---|---|---|---|
| splunk inbound policy 3 | TCP | {Forwarder Server Private IP}/32 | 9997 | Port for collecting logs from UF |
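Once the rule is in place, you can confirm from the Forwarder instance that the Splunk receiving port accepts TCP connections. The sketch uses only Python's standard `socket` module. `port_reachable` is a hypothetical helper name, and 10.0.0.5 is a placeholder for SPLUNK_PRIVATE_IP.

It checks TCP reachability only. A `True` result shows that the security group rule and the receiving port are open, not that events are being indexed.

```python
import socket


def port_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, ...
        return False


if __name__ == "__main__":
    splunk_private_ip = "10.0.0.5"  # hypothetical: replace with SPLUNK_PRIVATE_IP
    print(port_reachable(splunk_private_ip, 9997))
```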

Step 3. Creating an Automated Log Storage Script

The Cloud Trail log storage feature saves the logs to the Object Storage bucket as one file every hour. This tutorial provides a script that downloads these log files to the Forwarder instance, where the Splunk Universal Forwarder agent sends them to the Splunk Enterprise server automatically.

1. When the script first runs, it compares the log files in Object Storage with the local log files on the Forwarder instance and downloads any that are missing locally.

2. The most recently modified file is downloaded from Object Storage and extracted.

3. The extracted file is converted into a list of JSON objects, saved in the processed_data directory, and sent to Splunk as events.

4. If an error occurs during this process, the script automatically detects and downloads any missing log files.

- Note: Steps 2 to 4 are repeated every hour at 10 minutes past the hour.
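The hourly schedule in the note above comes from `wait_until_next_hour_10` in the script below. Pulling the same calculation out as a pure function shows why the first wait in the sample log is short and later waits are close to 3,600 seconds. `seconds_until_next_run` is a name used only for this illustration.

```python
import datetime


def seconds_until_next_run(now, minute=10):
    """Seconds from `now` until the next hh:10:00, mirroring wait_until_next_hour_10."""
    target = now.replace(minute=minute, second=0, microsecond=0)
    if now > target:
        # This hour's slot has passed: move to the next hour (rolls over midnight too).
        target += datetime.timedelta(hours=1)
    return (target - now).total_seconds()


print(seconds_until_next_run(datetime.datetime(2025, 1, 1, 9, 2, 30)))  # 450.0
print(seconds_until_next_run(datetime.datetime(2025, 1, 1, 9, 45, 0)))  # 1500.0
```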

- Connect to the Forwarder instance via SSH and set up the Python environment. Use the following commands to create a Python virtual environment and install the libraries the script imports.

```bash
# Create and activate a Python virtual environment
$ sudo apt install python3-venv
$ python3 -m venv myenv
$ source myenv/bin/activate

# Install the third-party libraries used by the script (subprocess is part of the standard library)
$ pip3 install requests python-dateutil
```

- The script uses the AWS CLI, so install and configure it first.

Install AWS CLI

```bash
$ sudo apt update
$ sudo apt install unzip
$ curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64-2.15.41.zip" -o "awscliv2.zip"
$ unzip awscliv2.zip
$ sudo ./aws/install
$ aws --version
aws-cli/2.15.41 Python/3.11.8 Linux/6.8.0-39-generic exe/x86_64.ubuntu.24 prompt/off
```

Caution: The latest AWS CLI version compatible with KakaoCloud's S3 API is 2.22.0. Later versions may cause S3 command requests to fail. This guide installs version 2.15.41.

Check the S3 API credentials issued in the preparation steps, and configure the AWS CLI with the following command:

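Configure AWS CLI

```bash
$ aws configure
AWS Access Key ID: ${CREDENTIAL_ACCESS_KEY}
AWS Secret Access Key: ${CREDENTIAL_SECRET_ACCESS_KEY}
Default region name: kr-central-2
Default output format:
```

| Environment variable | Description |
|---|---|
| CREDENTIAL_ACCESS_KEY | S3 API access key |
| CREDENTIAL_SECRET_ACCESS_KEY | S3 API secret access key |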
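Because the caution above caps the AWS CLI at version 2.22.0, a small check can confirm the installed version. This is a sketch: the regular expression assumes the `aws-cli/X.Y.Z ...` format shown in the `aws --version` output above.

Versions are compared as integer tuples rather than strings, because a string comparison would wrongly rank "2.9.0" above "2.22.0".

```python
import re

# Highest AWS CLI version this guide states is compatible with KakaoCloud S3.
MAX_SUPPORTED = (2, 22, 0)


def aws_cli_version(version_output):
    """Extract (major, minor, patch) from `aws --version` output."""
    match = re.search(r"aws-cli/(\d+)\.(\d+)\.(\d+)", version_output)
    if match is None:
        raise ValueError(f"unrecognized aws --version output: {version_output!r}")
    return tuple(int(part) for part in match.groups())


def is_supported(version_output):
    return aws_cli_version(version_output) <= MAX_SUPPORTED


if __name__ == "__main__":
    import shutil
    import subprocess

    if shutil.which("aws"):
        result = subprocess.run(["aws", "--version"], capture_output=True, text=True)
        # AWS CLI v2 prints to stdout; include stderr in case an older CLI is installed.
        print(is_supported(result.stdout + result.stderr))
    else:
        print("aws not found on PATH")
```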

- Open the script file.

```bash
sudo vi /home/ubuntu/cloudtrail/script.py
```

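- Paste the script below into the file, replacing the placeholder values in the Global Variable Declaration section with your own (see the variable table after the script).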

Info: The IAM endpoint URL and the Object Storage endpoint URL in the script below may change when private endpoints become available in the future.

```python
import datetime
import json
import logging
import os
import subprocess
import time
import zipfile

import requests
from dateutil import parser

# Global Variable Declaration: replace each placeholder with your own value
BUCKET_NAME = "${BUCKET_NAME}"
ACCESS_ID = "${ACCESS_KEY_ID}"
SECRET_KEY = "${ACCESS_SECRET_KEY}"
PROJECT_ID = "${PROJECT_ID}"

# Absolute paths, so the script behaves the same from any working directory
BASE_DIR = "/home/ubuntu/cloudtrail/"
OUTPUT_DIR = os.path.join(BASE_DIR, "processed_data")  # monitored by the Forwarder
ENDPOINT = "https://objectstorage.kr-central-2.kakaocloud.com"

# Log Configuration
LOG_FILE = os.path.join(BASE_DIR, "process_log.log")
logging.basicConfig(filename=LOG_FILE, level=logging.INFO)


# Define Log Function (Includes Timestamp)
def log(level, message):
    current_time = datetime.datetime.now().strftime("%Y-%m-%d %H:%M:%S")
    with open(LOG_FILE, "a") as f:  # closes the file after each entry
        print(f"{current_time} [{level}] {message}", file=f)


# Function to Retrieve API Token
def get_access_token():
    url = "https://iam.kakaocloud.com/identity/v3/auth/tokens"
    headers = {"Content-Type": "application/json"}
    body = {
        "auth": {
            "identity": {
                "methods": ["application_credential"],
                "application_credential": {"id": ACCESS_ID, "secret": SECRET_KEY},
            }
        }
    }
    try:
        response = requests.post(url, json=body, headers=headers, timeout=30)
        response.raise_for_status()
        return response.headers["X-Subject-Token"]
    except requests.exceptions.RequestException as e:
        logging.error(f"Error getting access token: {e}")
        return None


# Retrieve the object list of the bucket from the Object Storage API
def fetch_object_list(api_token):
    url = f"{ENDPOINT}/v1/{PROJECT_ID}/{BUCKET_NAME}?format=json"
    response = requests.get(url, headers={"X-Auth-Token": api_token}, timeout=30)
    response.raise_for_status()
    return response.json()


# Download one object with the AWS CLI; raises CalledProcessError on failure
def download_object(object_name, file_name):
    os.makedirs(BASE_DIR, exist_ok=True)
    full_file_path = os.path.join(BASE_DIR, file_name)
    command = ["aws", "--endpoint-url", ENDPOINT, "s3", "cp",
               f"s3://{BUCKET_NAME}/{object_name}", full_file_path]
    subprocess.run(command, check=True, stdout=subprocess.PIPE,
                   stderr=subprocess.PIPE, text=True)
    return full_file_path


# Extract <name>.zip into BASE_DIR and return the path of the extracted <name> file
def extract_zip(zip_file_path, file_name):
    with zipfile.ZipFile(zip_file_path, "r") as zip_ref:
        zip_ref.extractall(BASE_DIR)
    return os.path.join(BASE_DIR, os.path.splitext(file_name)[0])


# Read a file containing one JSON object per line and return the objects as a list
def convert_to_json_list(decompressed_file_path):
    json_list = []
    with open(decompressed_file_path, "r") as file:
        for line in file:
            if not line.strip():
                continue  # skip blank lines
            try:
                json_list.append(json.loads(line))
            except json.JSONDecodeError as e:
                log("ERROR", f"Error decoding line to JSON: {e}")
    return json_list


# Save the JSON list to processed_data/<name>.json and return the output path
def save_json_to_file(json_list, file_name):
    os.makedirs(OUTPUT_DIR, exist_ok=True)
    output_file_name = os.path.join(OUTPUT_DIR, os.path.splitext(file_name)[0] + ".json")
    with open(output_file_name, "w") as outfile:
        json.dump(json_list, outfile, indent=4)
    return output_file_name


# Download every .zip file in Object Storage that is missing locally (compare)
def compare_for_download(api_token, local_download_dir):
    try:
        local_files = [f for f in os.listdir(local_download_dir) if f.endswith(".zip")]
    except OSError as e:
        logging.error(f"Error getting local files: {e}")
        local_files = []
    logging.info(f"Local directory contains .zip files: {local_files}")

    try:
        remote_files = [item["name"] for item in fetch_object_list(api_token)
                        if item["name"].endswith(".zip")]
    except Exception as e:
        logging.error(f"Error fetching API data: {e}")
        remote_files = []
    logging.info(f"API retrieved .zip files: {remote_files}")

    files_to_download = [f for f in remote_files if f.split("/")[-1] not in local_files]
    logging.info(f"Files to be downloaded: {files_to_download}")

    for object_name in files_to_download:
        file_name = object_name.split("/")[-1]
        try:
            zip_path = download_object(object_name, file_name)
            logging.info(f"Downloaded: {file_name}")
            json_list = convert_to_json_list(extract_zip(zip_path, file_name))
            logging.info(f"Saved the JSON list to {save_json_to_file(json_list, file_name)}")
        except subprocess.CalledProcessError as e:
            logging.error(f"Subprocess failed: {e.stderr}")
        except Exception as e:  # bad zip, missing extracted file, write error, ...
            logging.error(f"Error processing file {file_name}: {e}")


# Select the .zip file with the most recent last_modified value (main)
def main_get_most_recent_file(files):
    zip_files = [f for f in files if f["name"].endswith(".zip")]
    if not zip_files:
        return None
    return max(zip_files, key=lambda x: parser.parse(x["last_modified"]))


# Main Script: download, extract, and convert the most recent log file (main)
def main_script(api_token):
    try:
        most_recent_file = main_get_most_recent_file(fetch_object_list(api_token))
    except Exception as e:
        return error_check(f"Error fetching files: {e}")
    if most_recent_file is None:
        return error_check("No files found.")

    object_name = most_recent_file["name"]
    file_name = object_name.split("/")[-1]
    try:
        zip_path = download_object(object_name, file_name)
        log("INFO", zip_path)
        json_list = convert_to_json_list(extract_zip(zip_path, file_name))
    except subprocess.CalledProcessError as e:
        return error_check(f"Error occurred while downloading the file: {e.stderr}")
    except zipfile.BadZipFile as e:
        return error_check(f"Error decompressing file {file_name}: {e}")
    except Exception as e:
        return error_check(f"Error processing file {file_name}: {e}")

    if not json_list:
        return error_check("No JSON data found.")
    try:
        log("SUCCESS", save_json_to_file(json_list, file_name))
    except OSError as e:
        error_check(f"Error saving JSON to file: {e}")


# Called on any error: log it, then download whatever files are missing locally
def error_check(error_message):
    log("ERROR", error_message)
    compare_for_download(get_access_token(), BASE_DIR)


# Sleep until 10 minutes past the next hour
def wait_until_next_hour_10():
    now = datetime.datetime.now()
    next_hour_10 = now.replace(minute=10, second=0, microsecond=0)
    if now > next_hour_10:
        next_hour_10 += datetime.timedelta(hours=1)
    wait_time = (next_hour_10 - now).total_seconds()
    log("INFO", f"Waiting for {wait_time} seconds until the next execution...")
    time.sleep(wait_time)


if __name__ == "__main__":
    # Initial download: fetch every log file that is not yet stored locally
    compare_for_download(get_access_token(), BASE_DIR)

    while True:
        # Process the most recent log file with a fresh token
        log("INFO", "Main script started")
        main_script(get_access_token())
        log("INFO", "Main script finished")
        # Then wait until 10 minutes past the next hour
        wait_until_next_hour_10()
```

| Environment variable | Description |
|---|---|
| BUCKET_NAME | Object Storage bucket name |
| ACCESS_KEY_ID | User access key ID |
| ACCESS_SECRET_KEY | User secret access key |
| PROJECT_ID | Project ID |

Step 4. Running the Script as a Background Process

- Set the appropriate permissions for the script and the log file.

```bash
# Create the log file
sudo touch /home/ubuntu/cloudtrail/process_log.log

# Set permissions for the log file
sudo chmod 666 /home/ubuntu/cloudtrail/process_log.log

# Set execute permissions for the script file
sudo chmod +x /home/ubuntu/cloudtrail/script.py
```

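- Run the script as a background process with the Python interpreter from the virtual environment created in Step 3.

```bash
# Activate the virtual environment (assumes myenv was created in the home directory)
source ~/myenv/bin/activate
nohup python3 /home/ubuntu/cloudtrail/script.py > /dev/null 2>&1 &
```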

- Check the results.

- Every hour at 10 minutes past the hour, the script writes the latest log file in JSON format to the /home/ubuntu/cloudtrail/processed_data directory.

- Verify that the log files were created:

```bash
ls /home/ubuntu/cloudtrail/processed_data
```

- Monitor the script's execution status and log output:

```bash
tail -f /home/ubuntu/cloudtrail/process_log.log
```

Example content of /home/ubuntu/cloudtrail/process_log.log:

```
INFO:root:Local directory .zip files: ['trail_XXXX-XX-XX-XX.zip', 'trail_XXXX-XX-XX-XX.zip', ...]
INFO:root:.zip files retrieved from API: ['trail_XXXX-XX-XX-XX.zip', 'trail_XXXX-XX-XX-XX.zip', ...]
INFO:root:Download list: []
[INFO] Main script started
[INFO] {'/home/ubuntu/cloudtrail/trail_XXXX-XX-XX-XX.zip'}
[SUCCESS] {'processed_data/trail_XXXX-XX-XX-XX.json'}
[SUCCESS] {'processed_data/trail_XXXX-XX-XX-XX.json'}
[INFO] Main script finished
[INFO] Waiting 453.866015 seconds until next execution...
[INFO] Main script started
[INFO] {'/home/ubuntu/cloudtrail/trail_XXXX-XX-XX-XX.zip'}
[SUCCESS] {'processed_data/trail_XXXX-XX-XX-XX.json'}
[SUCCESS] {'processed_data/trail_XXXX-XX-XX-XX.json'}
[INFO] Main script finished
[INFO] Waiting 3597.749401 seconds until next execution...
[INFO] Main script started
[INFO] {'/home/ubuntu/cloudtrail/trail_XXXX-XX-XX-XX.zip'}
[SUCCESS] {'processed_data/trail_XXXX-XX-XX-XX.json'}
[SUCCESS] {'processed_data/trail_XXXX-XX-XX-XX.json'}
[INFO] Main script finished
[INFO] Waiting 3598.177826 seconds until next execution...
```
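For a quick overview of a long-running log, you can count the entries written by the script's `log()` function by level. This sketch recognizes only the `[LEVEL]` tags that `log()` writes. Lines produced by Python's logging module (`INFO:root:...`) are ignored. `summarize` is a hypothetical helper name.

```python
import re
from collections import Counter

LOG_FILE = "/home/ubuntu/cloudtrail/process_log.log"

# Matches the "[LEVEL]" tag written by the script's log() function.
LEVEL_TAG = re.compile(r"\[(INFO|SUCCESS|ERROR)\]")


def summarize(lines):
    """Count log() entries per level; lines without a [LEVEL] tag are skipped."""
    counts = Counter()
    for line in lines:
        match = LEVEL_TAG.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


if __name__ == "__main__":
    try:
        with open(LOG_FILE) as f:
            print(dict(summarize(f)))
    except FileNotFoundError:
        print(f"{LOG_FILE} not found")
```

A growing ERROR count means the recovery path (re-downloading missing files) is running. The surrounding lines in the log show the cause.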

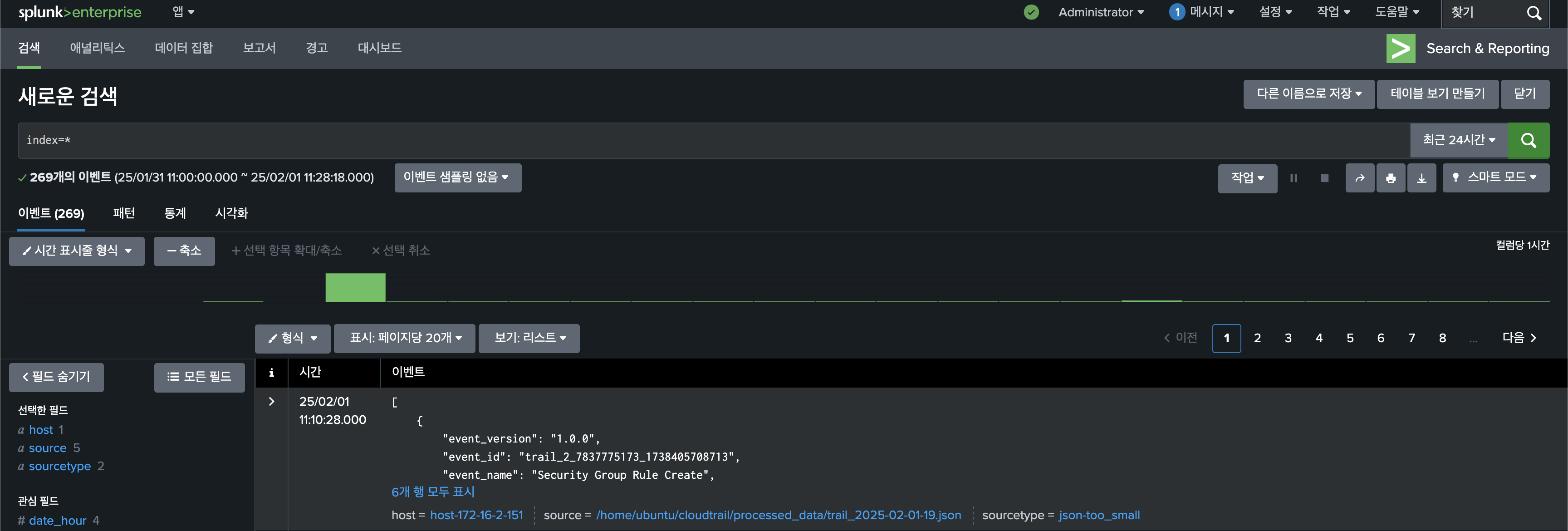

Step 5. Checking Logs in Splunk Enterprise

Access the Splunk Enterprise web UI and log in to check the Cloud Trail logs.

Example